Building internal AI tools with Streamlit

Most companies have a ton of valuable data internally. This could be analytics data on your customers interactions with your product. This could be an audit log of actions taken within the product (which is another way to see when different features are enabled).

Even if you are a small startup, you likely have valuable data in the form of support tickets - which can show you the areas of the product that need the most attention. You also likely have feature requests scattered throughout those support tickets.

Pre-LLMs, trying to extract insights from any data required specialized knowledge. You often needed to train your own model, which meant feature engineering & NLP, choosing a model, and most onerous of all… gathering your own training data.

Nowadays, you can just write a prompt like

Categorize the following ticket using these categories: Uptime, Security, Bug, Feature Request, Other

{put ticket here}

And boom, you have a classifier - and it’s probably decent without much tuning (although you absolutely should modify it).

In this post, we’ll look at how you can use Streamlit to build an internal tool that allows anyone at the company to experiment with using LLMs on top of any dataset you give access to.

What we’re building today

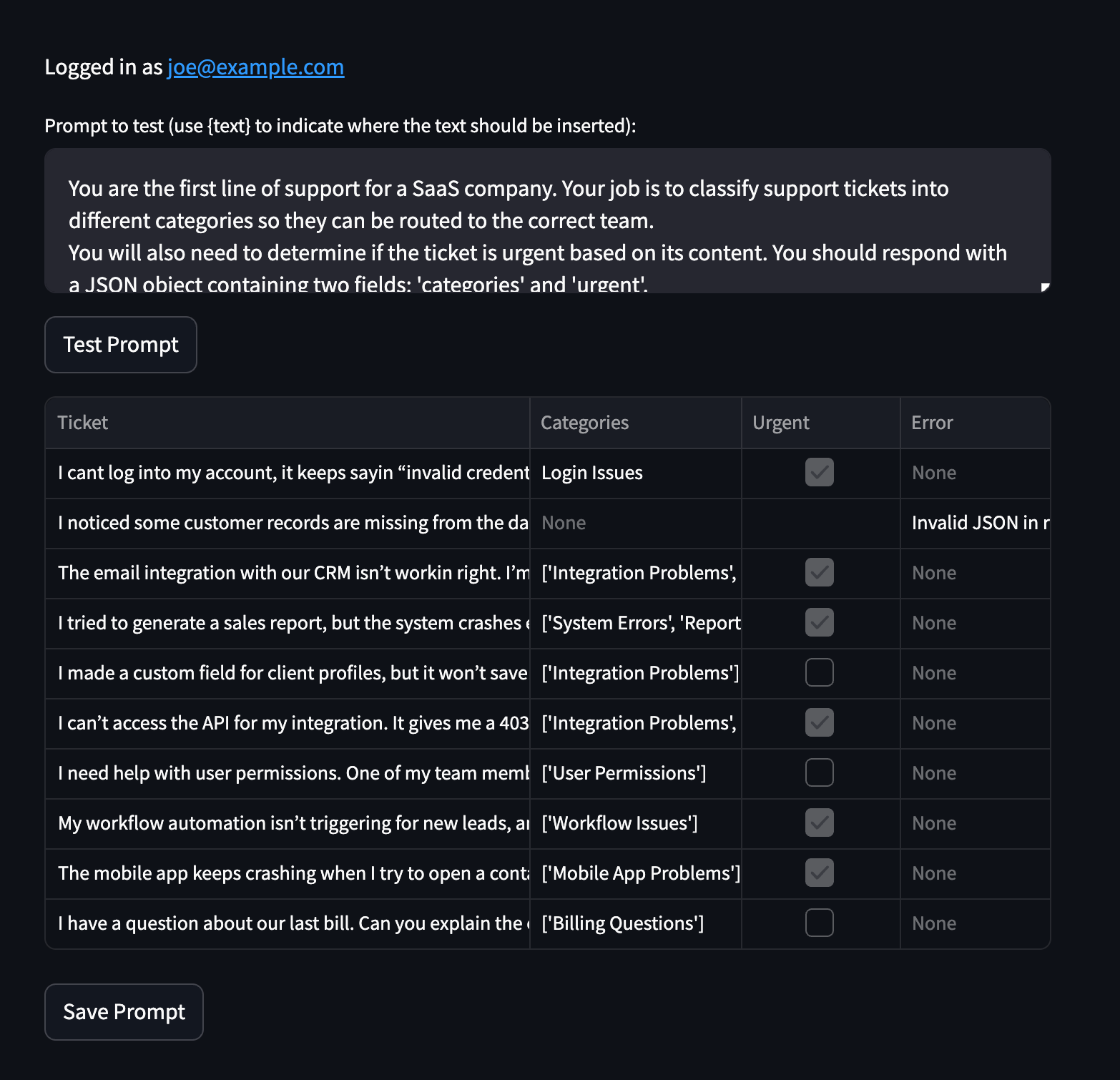

Users should be able to:

- Log in, so we know who they are and what data they have access to

- Write a prompt. In this case, it’s for a ticket classification system.

- Test the prompt on some sample data and see the output (including errors).

- Save the prompt for others to use.

Before we jump into the code, let’s first look at what Streamlit is.

A very quick intro to Streamlit

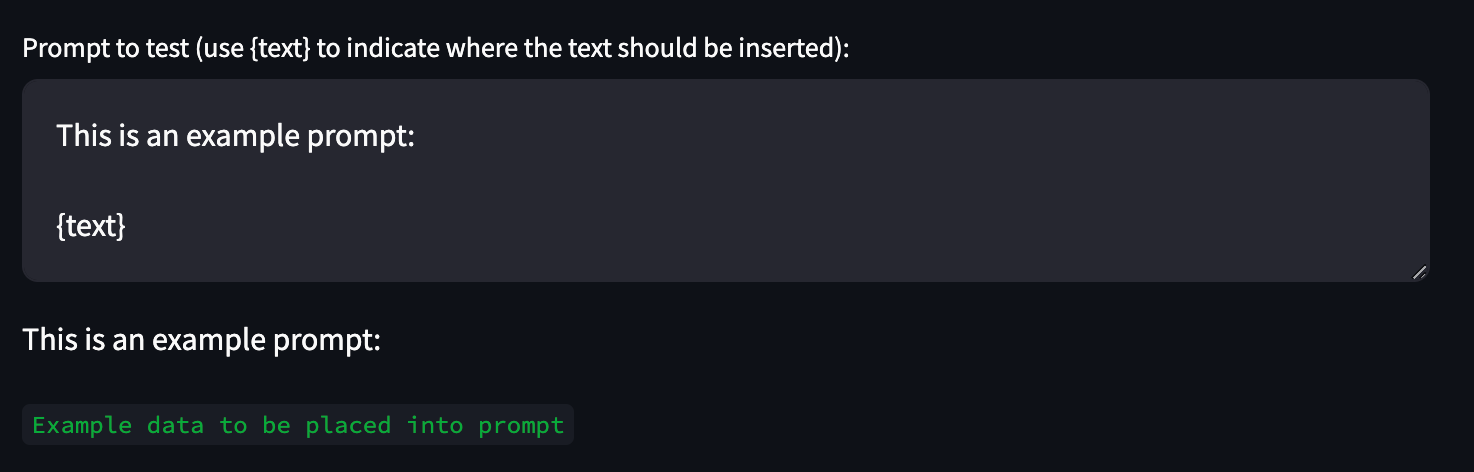

Streamlit is a fantastic tool for quickly building data applications. You get to write code like:

import streamlit as st

prompt = st.text_area(

"Prompt to test (use {text} to indicate where the text should be inserted):",

"This is an example prompt:\\n\\n{text}",

)

prompt_with_data = f"{prompt}".format(

text="`Example data to be placed into prompt`"

)

st.write(prompt_with_data)

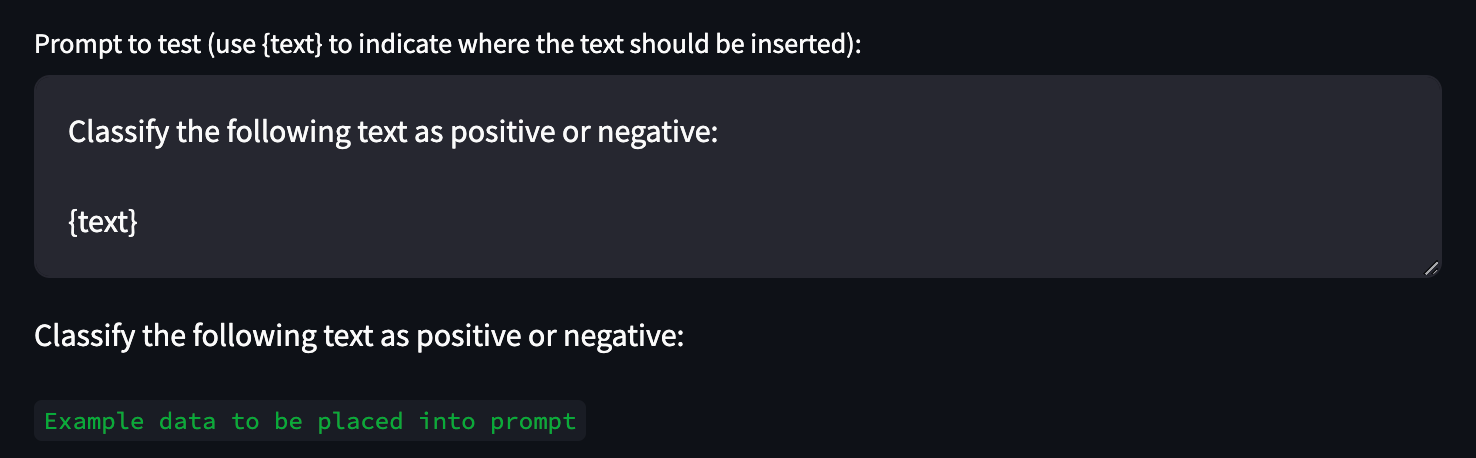

and Streamlit automatically creates an interactive frontend:

If a user were to update that prompt text_area, the rest of the Python code will re-run:

This is incredibly powerful for building tools like interactive dashboards. There’s a number of other components that you can use, like rendering a pandas dataframe as a table or a button to trigger actions off of.

There’s also a set of utilities for loading data from external sources, managing secrets, and caching data. The combination of all of these makes for a very powerful tool for interacting with your data.

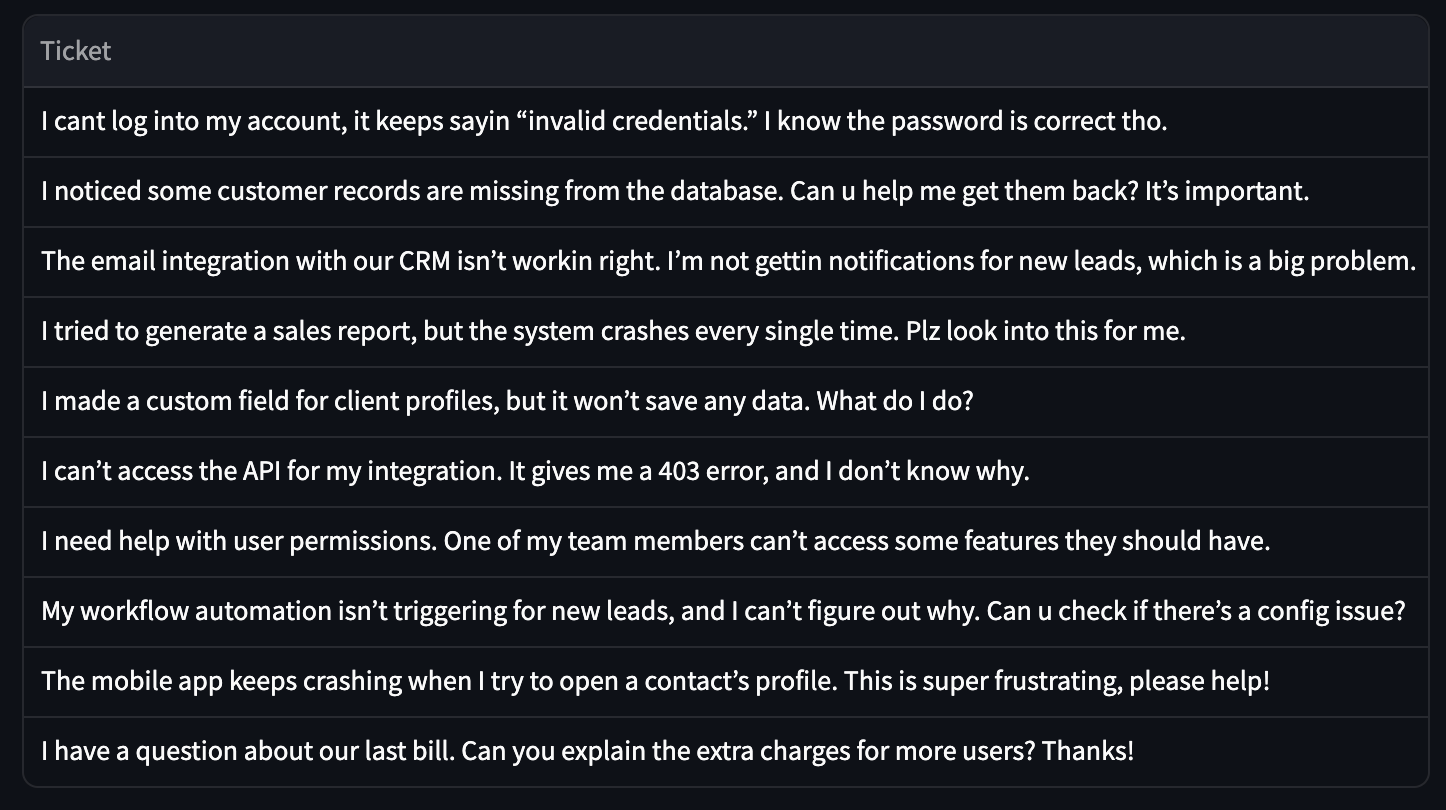

Step 1: Loading and visualizing our data

What good is a data application without data? To get a sense for what we can do, let’s start by hard coding some data:

import streamlit as st

def load_data_sample():

return pd.DataFrame(

{

"Ticket": [

"I cant log into my account, it keeps sayin “invalid credentials.” I know the password is correct tho.",

# ...

],

}

)

# Load the data

data = load_data_sample()

# render it as a table

st.dataframe(data, use_container_width=True, hide_index=True)

And this will render a table with all the tickets we hard coded:

Streamlit does have built in support for a number of data sources. For example, if we wanted to connect to Postgres, we’d first tell Streamlit how to connect to our Postgres database via the .streamlit/secrets.toml file:

[connections.postgresql]

dialect = "postgresql"

host = "localhost"

port = "5432"

database = "xxx"

username = "xxx"

password = "xxx"

We’d install pip install psycopg2-binary (which is needed to connect to Postgres).

And then we can update our load_data_sample() function:

def load_data_sample():

conn = st.connection("postgresql", type="sql")

# Results are cached for 5 min

return conn.query('SELECT description as Ticket FROM tickets;', ttl="5m")

Similarly, we can connect to Snowflake or even a Google sheet. It’ll always end up as a dataframe that we can easily visualize.

A brief note on caching

The query call has a built-in caching mechanism in the form of a TTL. There are, however, two other options for caching: st.cache_resource and st.cache_data.

cache_resource is commonly used for caching connections - so you can use it for caching the database connection or for the OpenAI client that we’ll construct later on.

cache_data is commonly used for caching the result of expensive queries. You can annotate the load_data_sample function which will speed up subsequent requests to load it.

Step 2: Running our data through the prompt

We started by taking in a prompt from the user. Then we loaded the data. Now it’s time to execute the prompt on that data.

For us, we’re going to ask our users to make sure their prompt outputs valid JSON with the form:

{

"urgent": false,

"categories": ["CategoryA", "CategoryB"]

}

We can do a fairly simple transformation of our dataframe which adds 3 columns: urgent, categories , and error (in the event that something went wrong).

@st.cache_data

def classify_ticket(ticket):

# Construct an OpenAI client

openai_key = st.secrets["openai_key"]

openai_client = OpenAI(api_key=openai_key)

# Format the prompt to include the ticket

response = openai_client.chat.completions.create(

messages=[{"role": "user", "content": prompt.format(text=ticket)}],

model="gpt-4o-mini",

)

response = response.choices[0].message.content

if response is None:

return {"error": "No response from OpenAI"}

try:

response = json.loads(response)

except json.JSONDecodeError:

return {"error": "Invalid JSON in response"}

if "urgent" not in response:

return {"error": "No 'urgent' field in response"}

if "categories" not in response:

return {"error": "No 'categories' field in response"}

return response

def modify_data_to_include_classification(data):

for i, row in data.iterrows():

text = row["Ticket"]

response = classify_ticket(text)

data.at[i, "Categories"] = response.get("categories")

data.at[i, "Urgent"] = response.get("urgent")

data.at[i, "Error"] = response.get("error")

Easy enough! The big question now is… how frequently do we want this to run? We’ve cached the most expensive part of this (both time and money-wise), which is the call to OpenAI.

But, we want to be careful to not run this every time someone makes a small change to the prompt. The easiest fix? Let’s just add a button to trigger the re-running of the prompt on the data.

Streamlit makes this really easy:

data = load_data_sample()

if st.button("Test Prompt"):

modify_data_to_include_classification(data)

st.dataframe(data, use_container_width=True, hide_index=True)

st.button will return True when the button is clicked and will modify the Dataframe to add new columns. A user that has never clicked Test Prompt will see just the unclassified sample data for inspiration.

And that’s… most of it!

A user can open up our app, view some sample data, write a prompt, and see the results of running that prompt on the sample data. The only thing that’s a bit scary, is we haven’t added any authentication. Any user can interact with our data and we don’t know who wrote which prompts.

Step 3: Adding authentication

One quirk of Streamlit today is that authentication is difficult. The out of the box options aren’t great for a company building an internal app, where you might want sign in with Okta or Azure via SSO/SAML or you might want to make sure 2FA is required before using the application.

PropelAuth is a great fit here as PropelAuth provides full authentication UIs that you can use directly with Streamlit.

Looking to set up a really basic application with just email and password login? The code will look like this:

user = auth.get_user()

if user is None:

st.error('Unauthorized')

st.stop()

st.write('Logged in as ', user.email)

Looking to set up an advanced application that requires users to log in via SAML? The code snippet is the same!

For a full guide on how to get started, check out our documentation here. The gist is that we will create a file propelauth.py which will export an auth object.

At the top of our script, we just need to make sure we can load the user or stop the rest of the script from running. We can then user the user’s ID in any of queries to the data to make sure they are only seeing data they have access to.

from propelauth import auth

# Is this a valid user? If not, make sure the script stops

user = auth.get_user()

if user is None:

st.error('Unauthorized')

st.stop()

st.write('Logged in as ', user.email)

# ...

def load_data_sample(user_id):

conn = st.connection("postgresql", type="sql")

return conn.query(

'SELECT description as Ticket FROM tickets WHERE user_id = :user_id;',

params={"user_id": user_id}

ttl="0"

)

data = load_data_sample(user.user_id)

Step 4: Saving the prompt

As an optional last step - let’s say we wanted to provide a way for users to save their prompts. We can re-use basically everything we’ve learned so far and make this really easy:

if st.button("Save Prompt"):

conn = load_connection() # e.g. st.connection("postgresql", type="sql")

with conn.session as session:

session.execute(

"INSERT INTO prompts (user_id, prompt) VALUES (:user_id, :prompt);",

{"user_id": user.user_id, "prompt": prompt}

)

session.commit()

st.write("Saved!")

Summary

In this guide, we've explored how to build powerful internal AI tools using Streamlit. We've covered loading and visualizing data, running prompts on that data, adding authentication with PropelAuth, and even saving user-generated prompts. By leveraging these techniques, you can create robust, secure, and interactive data applications that harness the power of AI for your organization's specific needs.